Posted on May 16 2017

Sanitation practices that keep your production environment clean can also improve irrigation quality. Debris from crop planting, residue from equipment and other forms of contamination should be removed before they get into the water. Contaminants that enter water storage and distribution plumbing leave physical, chemical and biological residues. These residues can make your water unsanitary or “dirty.” Dirty water can impede irrigation, limit the efficacy of treatment and increase the risk of plant pathogens. Controlling treatments and monitoring water quality can help get your water into shape for irrigation.

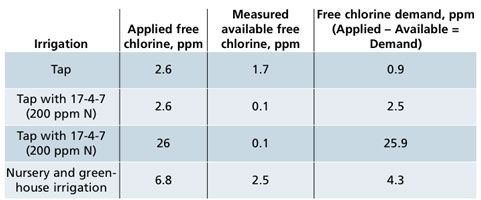

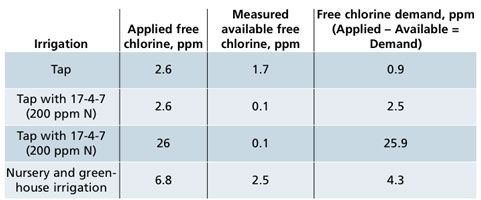

Water for irrigation should come from a reliable supply that can deliver a sufficient volume into the irrigation system. There, it’s treated, stored, distributed and applied to the crop. Throughout irrigation systems there are control points that can be monitored and adjusted to improve water quality. With each treatment, the water should become better conditioned for irrigation. Irrigation should meet the hydration and nutrition requirements of the crop, with low risk of disease.

Table 1. Irrigation with tap (potable) water only or tap water with 17-4-7 (N:P:K) at 200 ppm nitrogen from ammonium nitrate. Free chlorine was applied at 2.6 ppm, and available free chlorine was measured five seconds later. The free chlorine demand was determined from: Applied – Available = Demand. The last row shows that an additional applied free chlorine dose of 26 ppm provided a measured available free chlorine near zero. The free chlorine demand from fertilizer solutions that contain ammonium can be excessive, and most of the chlorine was rapidly transformed into combined chlorine. The last row shows that an averaged free chlorine demand of 4.3 ppm is typical of nursery and greenhouse irrigation.
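The demand calculation in the Table 1 caption is a simple subtraction, and a minimal Python sketch may make it easier to apply to your own readings. The function name and the 2.0 ppm available reading are hypothetical illustrations; only the 2.6 ppm applied dose comes from the caption.

```python
def free_chlorine_demand(applied_ppm: float, available_ppm: float) -> float:
    """Return free chlorine demand in ppm, using Applied - Available = Demand."""
    if applied_ppm < 0 or available_ppm < 0:
        raise ValueError("concentrations must be non-negative")
    return applied_ppm - available_ppm


# 2.6 ppm applied is taken from the Table 1 caption.
# The 2.0 ppm available reading is a made-up value for illustration only.
demand = free_chlorine_demand(applied_ppm=2.6, available_ppm=2.0)
print(f"Free chlorine demand: {demand:.1f} ppm")
```

A demand close to the applied dose means almost none of the chlorine remains available, which is the situation the caption describes for ammonium-containing fertilizer solutions.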

About half of the water treatment technologies can provide reliable irrigation. Problems with scale and biofilm are prominent throughout most irrigation systems, resulting in limited flow and clogged emitters. Crop health is often compromised by elevated alkalinity, salt buildup of waste ions and nutritional deficiencies. Practices that improve plant health and overall sanitation can help to decrease contaminants and improve the efficacy of treatments. The treatment technologies available to improve water quality may themselves be limited by poor physical, chemical and biological water quality.

• Chemical control of pH can be used to neutralize water alkalinity, decrease mineral deposits (scale) and increase plant-available nutrients. Nutrient management also relies on the electrical conductivity (EC) of the water. Monitoring these control points can indicate the level of control needed for crop nutrition.

• Physical control of debris and particulates may require additional filtration and/or basins to separate suspended and settled solids. Monitoring at these points can identify problems before water distribution becomes impeded in the irrigation system.

• Biological control can be used to mitigate algae, bacteria, fungi and molds that cause biofilm in irrigation systems. Monitoring at points in the irrigation system can identify changes in biological conditions that result in inconsistent flow and the potential to harbor pathogenic species.

• Disinfestant control can help to mitigate pathogens before they reach the crop. Monitoring the levels of active ingredients (AI) and the sanitizing strength, measured as oxidation reduction potential (ORP), can be used to determine the potential for disinfestation of pathogens.

Disinfestation technologies are often installed in response to a previous crop disease suspected to be caused by pathogens. Treatment systems can seem like a form of insurance to buyers that the problem won’t happen again. Growers should guard against a sense of complacency, as all treatment technologies have limitations and considerations for efficacy. There is no “one-size-fits-all” disinfestation technology, because water quality and combinations of treatments differ from one operation to the next.

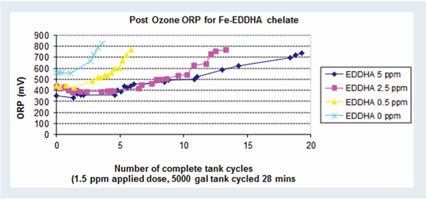

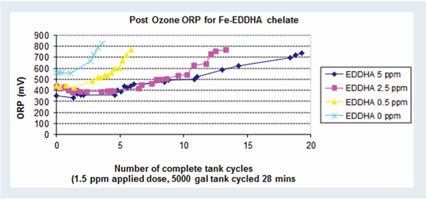

Figure 1. Ozone was applied at 1.5 ppm with each tank cycle (x-axis) to water that contained fertilizer iron, chelated with EDDHA at different rates (0, 0.5, 2.5 and 5 ppm). Each fertilizer solution was ozonated until it reached the target oxidation reduction potential (ORP) of 650 to 750 mV (y-axis).

Residual concentration of sanitizing chemicals can be determined by the percent reduction from the treatment dose to the residual that remains after time or distance. For example, sodium hypochlorite, the active ingredient in common household bleach, is widely used to control waterborne pathogens and algae in irrigation water. Once added to water, sodium hypochlorite is converted to hypochlorous acid (HOCl) and the hypochlorite ion (ClO-). The balance is determined by the pH of the water; hypochlorous acid, which predominates in acidic water (pH below 7), is more effective for disinfestation than hypochlorite, which predominates at pH above 7.5. Together, the two forms are measured as the free chlorine residual (ppm). This represents the chlorine available to disinfest plant pathogens; however, it decreases over time from reactions with organic matter, microorganisms, ammonium and other environmental factors.
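Both ideas in the paragraph above, percent reduction and the pH-dependent balance between hypochlorous acid and hypochlorite, can be sketched in a few lines of Python. This is an illustration under stated assumptions: the function names are hypothetical, and the pKa of about 7.5 for hypochlorous acid is a commonly cited value near 25 °C that shifts with temperature, not a figure from the article.

```python
HOCL_PKA = 7.5  # assumption: approximate pKa of hypochlorous acid near 25 C


def percent_reduction(dose_ppm: float, residual_ppm: float) -> float:
    """Percent of the treatment dose lost between dosing and the sample point."""
    if dose_ppm <= 0:
        raise ValueError("dose must be positive")
    return 100.0 * (dose_ppm - residual_ppm) / dose_ppm


def hocl_fraction(ph: float, pka: float = HOCL_PKA) -> float:
    """Fraction of free chlorine present as HOCl at a given pH.

    Uses the standard acid dissociation relationship:
    HOCl / (HOCl + ClO-) = 1 / (1 + 10 ** (pH - pKa)).
    """
    return 1.0 / (1.0 + 10.0 ** (ph - pka))


# Sample values are illustrative, not measurements from the article.
print(f"Reduction: {percent_reduction(2.6, 1.3):.0f}%")
for ph in (6.0, 6.5, 7.5, 8.0):
    print(f"pH {ph}: about {hocl_fraction(ph):.0%} of free chlorine is HOCl")
```

Under this assumption, roughly nine-tenths of the free chlorine is hypochlorous acid at pH 6.5, while only about half is at pH 7.5, which is why the same free chlorine reading can disinfest less effectively in alkaline water.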

Monitoring disinfestation with chlorine should consider the form of chlorine, its concentration and the contact time. The concentration of chlorine can be monitored as free chlorine, chlorine dioxide and/or total chlorine. Research indicates control of Pythium and Phytophthora zoospores can be achieved in irrigation water with a measurable free chlorine level of 2.5 ppm at a pH of 6.5. This assumes a minimum contact time of two to 10 minutes with free chlorine.
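As a rough sketch, a single monitoring reading can be checked against the three factors named above. The class and function names are hypothetical. Treating pH 6.5 as an upper limit and two minutes as the minimum contact time are assumptions made for illustration; the article gives the pH as a single value and the contact time as a range.

```python
from dataclasses import dataclass


@dataclass
class ChlorineReading:
    """One monitoring reading; field names are illustrative."""
    free_chlorine_ppm: float
    ph: float
    contact_minutes: float


def meets_zoospore_guideline(
    reading: ChlorineReading,
    min_free_chlorine_ppm: float = 2.5,  # free chlorine level cited in the text
    max_ph: float = 6.5,                 # assumption: cited pH used as an upper bound
    min_contact_minutes: float = 2.0,    # assumption: low end of the cited range
) -> bool:
    """Return True when concentration, pH and contact time all meet the guideline."""
    return (
        reading.free_chlorine_ppm >= min_free_chlorine_ppm
        and reading.ph <= max_ph
        and reading.contact_minutes >= min_contact_minutes
    )


# Sample reading is made up for illustration.
sample = ChlorineReading(free_chlorine_ppm=2.7, ph=6.4, contact_minutes=5.0)
print(meets_zoospore_guideline(sample))
```

Keeping the thresholds as parameters lets you adjust them to your own crop, pathogen and water source rather than hard-coding one guideline.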

The disinfestation strength of oxidizers, such as free chlorine, chlorine dioxide and ozone, can also be estimated from the oxidation reduction potential (ORP). An ORP reading over 650 mV is used as a guideline to control human pathogens, such as Escherichia coli and Salmonella. For vegetable and fruit wash in postharvest processing, an ORP of 650 to 700 mV is recommended to kill bacterial contaminants. Effective disinfestation may be limited by poor physical, chemical and biological water quality.
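ORP readings taken at several points in the system can be screened against the 650 mV guideline with a short script. The sketch below is illustrative only: the function name, the monitoring point names and the readings are hypothetical, and the threshold should be set for your own target organism.

```python
from typing import Dict, List

ORP_GUIDELINE_MV = 650.0  # guideline cited in the text for human pathogens


def flag_low_orp(
    readings_mv: Dict[str, float],
    threshold_mv: float = ORP_GUIDELINE_MV,
) -> List[str]:
    """Return the monitoring points whose ORP is not over the threshold."""
    return sorted(
        point for point, mv in readings_mv.items() if mv <= threshold_mv
    )


# Point names and readings are made up for illustration.
readings = {"storage tank": 720.0, "riser": 610.0, "emitter": 650.0}
print(flag_low_orp(readings))
```

A reading exactly at the threshold is flagged because the guideline calls for an ORP over 650 mV.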

Similar mortality curves have been shown for Pythium zoospores at an ORP of 780 mV provided by a free chlorine dose of 0.5 to 2 ppm. At this rate of free chlorine, there have been few incidents of phytotoxicity in ornamental crops; therefore, the guideline is a measurable 2.5 ppm free chlorine at discharge points (risers or sprinklers) and pH 6. This will help mitigate zoospores of many Pythium and Phytophthora species in irrigation water.

Treatments applied to the water will interact with each other and can have unintended results. The possible reactions between disinfestation technologies and your fertilizer type, concentration and other water quality factors should be tested. Monitoring programs should be developed to evaluate disinfestation strength when using treatment technologies. Regular monitoring can help to determine effective treatment rates that control water delivery, quality and pathogen disinfestation with lower risk of crop phytotoxicity, oxidation of equipment or other issues from excessive water treatment.

Develop an irrigation monitoring program to help determine an appropriate treatment technology and rate, based on the disinfestant demand from debris, microbes and chemicals. The time and money invested in monitoring will likely be returned through savings on chemical costs and improved plant health.

Dr. Dustin Meador is the Executive Director for the Center for Applied Horticultural Research in Vista, California.

Original Article by Dr. Dustin Meador

Originally published in Grower Talks magazine

May 2017

Used with permission.
